In the style of some of the earlier posts, I present a simple economic problem, that uses some sort of numerical method as part of the solution method. Of course, we use Julia to do so. However, this time we’re actually relying a bit on R, but don’t tell anyone.

Rust models

In the empirical discrete choice literature in economics, a relatively simple and popular framework is the one that matured in Rust (1987, 1988), and was later named Rust models in Aguirregabiria and Mira (2010). Basically, we consider an agent who has to choose a (in the infinite horizon) stationary policy (sequence of actions), to solve the following problem

\(\max_{a}E\left\{\sum_{t=0}^{T} \beta^t U(a_t, s_t)|s_0\right\}\)

where \(a=(a_0, a_1, \ldots, a_T)\), and \(s_t\) denotes the states. For simplicity, we consider binary decision problems such that \(a_t\in\{1,2\}\). Assume that there an additive shock, \(\varepsilon_t\), to utility such that

\(U(a_t,s_t)=U(a_t, x_t, \varepsilon_t) = u(a_t, x_t)+\varepsilon_t\)

where \(s_t=(x_t,\varepsilon_t)\) and \(x_t\) is usually called the observed states.

The additive and time separable nature of the problem allows us to consider a set of simpler problems instead. We reformulate the problem according to the principle of optimality, and write the problem in its dynamic programming formulation

\( V_t(x_t, \varepsilon_t) = max_{a_t}\left[u(a_t, x_t)+\epsilon_t + \beta E_{s_{t+1}|s_t}(V_{t+1}(x_{t+1}, \varepsilon_{t+1}))\right], \forall t\in\{0,1,\ldots,T\} \)

The object \(V_t\) is called the value function, as it summarizes the optimal value we can obtain. If we assume conditional independence between the observed states and the shocks along with the assumptions explained in the articles above, we can instead consider this simpler problem

\( W_t(x_t) = E_{\varepsilon_{t+1}|\varepsilon_t}\left\{max_{a_t}(u(a_t, x_t)+\varepsilon_t + \beta E_{x_{t+1}|x_t}((W_{t+1}(x_{t+1}))\right\}, \forall t\in\{0,1,\ldots,T\} \)

where \(W(x_t)\equiv E_{\varepsilon_{t+1}|\varepsilon}\left\{V_{t+1}(x_{t+1},\varepsilon_{t+1})\right\}\). This object is often called the ex-ante or integrated value function. Now, if we assume that the shocks are mean 0 extreme value type I, we get the following

\( W_t(x_t) = \log\left\{\sum_{a\in\mathcal{A}} \exp\left[u(a_t,x_t)+\beta E_{x_{t+1}|x_t}\left(W_{t+1}(x_{t+1})\right)\right]\right\}, \forall t\in\{0,1,\ldots,T\} \)

At this point we’re very close to something we can calculate. Either we just recursively apply the above to find the finite horizon solution, or if we’re in the infinite horizon case, then then we can come up with a guess for \(W\), and apply value function iterations (successive application of the right-hand side in the equation above), to find a solution. We just need to be able to handle the evaluation of the expected value inside the \(\exp\)‘s.

Solving for a continuous function

In the application below, we’re going to have a continuous state. Rust originally solved the problem of handling a continuous function on a computer by discretizing the continuous state in 90 or 175 bins, but we’re going to approach it a bit differently. We’re going to create a type that allows us to construct piecewise linear functions. This means that we’re going to have some nodes where we calculate the function value, and in between these, we simply use linear interpolation. Outside of the first and last nodes we simply set the value to the value at these nodes. We’re not going to extrapolate below, so this won’t be a problem.

Let us have a look at a type that can hold this information.

type PiecewiseLinear nodes values slopes end

To construct an instance of this type from a set of nodes and a function, we’re going to use the following constructor

function PiecewiseLinear(nodes, f) slopes = Float64[] fn = f.(nodes) for i = 1:length(nodes)-1 # node i and node i+1 ni, nip1 = nodes[i], nodes[i+1] # f evaluated at the nodes fi, fip1 = fn[i], fn[i+1] # store slopes in each interval, so we don't have to recalculate them every time push!(slopes, (fip1-fi)/(nip1-ni)) end # Construct the type PiecewiseLinear(nodes, fn, slopes) end

Using an instance of \(PiecewiseLinear\) we can now evaluate the function at all input values between the first and last nodes. However, we’re going to have some fun with types in Julia. In Julia, we call a function using parentheses \(f(x)\), but we generally cannot call a type instance.

julia> pwl = PiecewiseLinear(1:10, sqrt) PiecewiseLinear(1:10,[1.0,1.41421,1.73205,2.0,2.23607,2.44949,2.64575,2.82843,3.0,3.16228],[0.414214,0.317837,0.267949,0.236068,0.213422,0.196262,0.182676,0.171573,0.162278]) julia> pwl(3.5) ERROR: MethodError: objects of type PiecewiseLinear are not callable

… but wouldn’t it be great if we could simply evaluate the interpolated function value at 3.5 that easily? We can, and it’s cool, fun, and extremely handy. The name of the concept is: call overloading. We simply need to define the behavior of “calling” (using parentheses with some input) an instance of a type.

function (p::PiecewiseLinear)(x) index_low = searchsortedlast(p.nodes, x) n = length(p.nodes) if 0 < index_low < n return p.values[index_low+1]+(x-p.nodes[index_low+1])*p.slopes[index_low] elseif index_low == n return p.values[end] elseif index_low == 0 return p.values[1] end end

Basically, we find out which interval we’re in, and then we interpolate appropriately in said interval. Let me say something upfront, or… almost upfront. This post is not about optimal performance. Julia is often sold as “the fast language”, but to me Julia is much more about productivity. Sure, it’s great to be able to optimize your code, but it’s also great to simply be able to do *what you want to do* – without too much hassle. Now, we can do what we couldn’t before.

julia> pwl(3.5) 1.8660254037844386

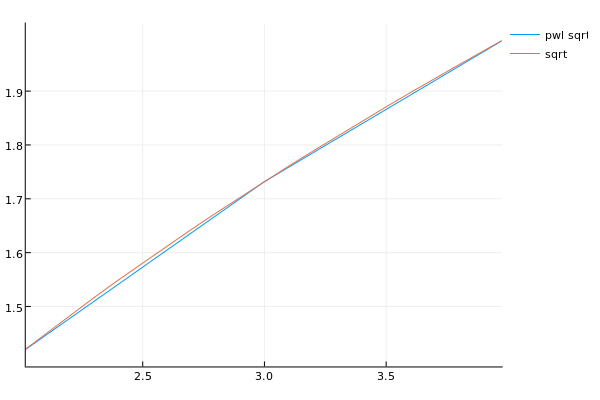

We can even plot it together with the actual sqrt function on the interval (2,4).

julia> using Plots julia> plot(x->pwl(x), 2, 4, label="Piecewise Linear sqrt") julia> plot!(sqrt, 2, 4, label="sqrt") julia> savefig("sqrtfig")

and we get

which seems to show a pretty good approximation to the square root function considering the very few nodes.

Numerical integrals using Gaussian quadrature

So we can now create continuous function approximations based on finite evaluation points (nodes). This is great, because this allow us to work with \(W\) in our code. The only remaining problem is: we need to evaluate the expected value of \(W\). This can be expressed as

\( E_x(f) = \int_a^b f(x)w(x)dx\)

where \(w(x)\) is going to be a probability density function and \(a\) and \(b\) can be finite or infinite and represent upper and lower bounds on the values the random variable (state) can take on. In the world of Gaussian quadrature, \(w\)‘s are called weight functions. Gaussian quadrature is basically a method for finding good evaluation points (nodes) and associated weights such that the following approximation is good

\(\int_a^b f(x)w(x)dx\approx \sum_{i=1}^N f(x_i)w_i\)

where \(N\) is the number of nodes. We’re not going to provide a long description of the methods involved, but we will note that the package DistQuads.jl allows us to easily obtain nodes and weights for a handful of useful distributions. To install this package write

Pkg.clone("https://github.com/pkofod/DistQuads.jl.git")

as it is not currently tagged in METADATA.jl. This is currently calling out to R’s statmod package. The syntax is quite simple. Define a distribution instance, create nodes and weights, and calculate the expected value of the function in three simple steps:

julia> using Distributions, DistQuads julia> bd = Beta(1.5, 50.0) Distributions.Beta{Float64}(α=1.5, β=50.0) julia> dq = DistQuad(bd, N = 64) DistQuads.DistQuad([0.000334965,0.00133945,0.00301221,0.0053512,0.00835354,0.0120155,0.0163327,0.0212996,0.0269103,0.0331578 … 0.738325,0.756581,0.774681,0.792633,0.810457,0.828194,0.845925,0.863807,0.882192,0.902105],[0.00484732,0.0184431,0.0381754,0.0603806,0.0811675,0.097227,0.106423,0.108048,0.102724,0.0920435 … 1.87035e-28,5.42631e-30,1.23487e-31,2.11992e-33,2.60541e-35,2.13019e-37,1.03855e-39,2.52575e-42,2.18831e-45,2.90458e-49],Distributions.Beta{Float64}(α=1.5, β=50.0)) julia> E(sqrt, dq) 0.15761865929803381

We can then try Monte Carlo integration with many nodes to see how close they are

julia> mean(sqrt.(rand(bd, 100000000))) 0.1576136243615477

and they appear to be in the same ballpark.

To replace, or not to replace

The model we’re considering here is a simple one. It’s a binary choice model very close to the model in Rust (1987). An agent is in charge of maintaining a bus fleet and has a binary choice each month then the buses come in for maintenance: replace the engine (effectively renewing the bus) or maintain it. Replacement costs a fixed price RC, and regular maintenance has a cost that is a linear function of the odometer reading since last replacement (or purchase if replacement has never occurred). We can use the expressions above to solve this model, but first we need to specify how the odometer reading changes from month to month conditional on the choices made. We assume that the odometer reading (mileage) changes according to the following

\(x_{t+1}=\tilde{a}x_{t}+(1-\tilde{a}x_t)\Delta x, \quad\text{where }\Delta x \sim Beta(1.4, 50.0)\)

where \(\tilde{a}=2-a\), and as we remember \(a\in\{1,2\}\). As we see, a replacement returns the state to 0 plus whatever mileage might accumulate that month, and regular maintenance means that the bus will end up with end of period mileage between \(x_{t+1}\) and 1. To give an idea about the state process, we see the pdf for the distribution of \(\Delta x\) below.

Solving the model

We are now ready to solve the model. Let us say that the planning horizon is 96 months and the monthly discount factor is 0.9. After the 96 months, the bus is scrapped at a value of 2 units of currency, such that

\(W_T(x)=2.0\)

and from here on, we use the recursion from above. First, set up the state space.

using Distributions, DistQuads, Plots # State space bd = Beta(1.5, 50.0) dq = DistQuad(bd, N = 64) Sˡ = 0 Sʰ = 1 n_nodes = 100 # arbitrary, but could be varied nodes = linspace(Sˡ, Sʰ, n_nodes) # doesn't have to be uniformly distributed RC = 11.5 # Replacement cost c = 9.0 # parameter in linear maintenance cost c*x β = 0.9 # discount factor

Then, define utility function and expectation operator

u1 = PiecewiseLinear(nodes, x->-c*x) # "continuous" cost of maintenance u2 = PiecewiseLinear(nodes, x->-RC) # "continuous" cost of replacement (really just a number, but...) # Expected value of f at x today given a where x′ is a possible state next period Ex(f, x, a, dq) = E(x′->f((2-a)*x.+(Sʰ-(2-a)*x).*x′), dq)

Then, we simply

#### SOLVE V = Array{PiecewiseLinear,1}(70) V[70] = PiecewiseLinear(nodes, x->2) for i = 69:-1:1 EV1 = PiecewiseLinear(nodes, x->Ex(V[i+1], x, 1, dq)) EV2 = PiecewiseLinear(nodes, x->Ex(V[i+1], x, 2, dq)) V[i] = PiecewiseLinear(nodes, x->log(exp(u1(x)+β*EV1(x))+exp(u2(x)+β*EV2(x)))) end

To get our solution. We can then plot either integrated value functions or policies (choice probabilities). We calculate the policies using the following function

function CCP(x, i) EV1 = PiecewiseLinear(nodes, x->Ex(V[i], x, 1, dq)) EV2 = PiecewiseLinear(nodes, x->Ex(V[i], x, 2, dq)) 1/(1+exp(u2(x)+β*EV2(x)-u1(x)-β*EV1(x))) end

We see that there are not 69/70 distinct curves in the plots. This is because we eventually approach the “infinite horizon”/stationary policy and solution.

Simulation

Given the CCPs from above, it is very straight forward to simulate an agent say from period 1 to period 69.

#### SIMULATE x0 = 0.0 x = [x0] a0 = 0 a = [a0] T = 69 for i = 2:T _a = rand()<CCP(x[end], i) ? 1 : 2 push!(a, _a) push!(x, (2-_a)*x[end]+(Sʰ-(2-_a)*x[end])*rand(bd)) end plot(1:T, x, label="mileage") Is = [] for i in eachindex(a) if a[i] == 2 push!(Is, i) end end vline!(Is, label="replacement")

Conclusion

This blog post had a look at simple quadrature, creating custom types with call overloading in Julia, and how this can be used to a solve a very simple discrete choice model in Julia. Interesting extensions are of course to allow for more states, more choices, other shock distributions than extreme value type I and so on. Let me know if you try to extend the model in any of those directions, and I would love to have a look!