This post, and other posts with similar tags and headers, are mainly directed at the students who are following the Dynamic Programming course at Dept. of Economics, University of Copenhagen in the Spring 2017. The course is taught using Matlab, but I will provide a few pointers as to how you can use Julia to solve the same problems. So if you are an outsider reading this I welcome you, but you won’t find all the explanations and theory here. If you want that, you’ll have to come visit us at UCPH and enroll in the class!

This week we continue with a continuous choice model. This means we have to use interpolation and numerical optimization.

A (slightly less) simple model

Consider a simple continuous choice consumption-savings model:

\(V_t(M_t) = \max_{C_t}\sqrt{C_t}+\mathbb{E}[V_{t+1}(M_{t+1})|C_t, M_t]\)

subject to

\(M_{t+1} = M_t – C_t+R_t\\

C_t\leq M_t\\

C_t\in\mathbb{R}_+\)

where \(R_t\) is 1 with probability \(\pi\) and 0 with probability \(1-\pi\), \(\beta=0.9\), and \(\bar{M}=5\)

Last week the maximization step was merely comparing values associated with the different discrete choices. This time we have to do continuous optimization in each time period. Since the problem is now continuous, we cannot solve for all \(M_t\). Instead, we need to solve for \(V_t\) at specific values of \(M_t\), and interpolate in between. Another difference to last time is the fact that the transitions are stochastic, so we need to form (very simple) expectations as part of the Bellman equation evaluations.

Interpolation

It is of course always possible to make your own simple interpolation scheme, but we will use the functionality provided by the Interpolations.jl package. To perform interpolation, we need a grid to be used for interpolation \(\bar{x}\), and calculate the associated function values.

f(x) = (x-3)^2 x̄ = linspace(1,5,5) fx̄ = f.(x̄)

Like last time, we remember that the dot after the function name and before the parentheses represent a “vectorized” call, or a broadcast – that is we call f on each element of the input. We now use the Interpolations.jl package to create an interpolant \(\hat{f}\).

using Interpolations f̂ = interpolate((collect(x̄),), fx̄, Gridded(Linear()))

We can now index into \(\hat{f}\) as if it was an array

f̂[-3] #returns 16.0

We can also plot it

using Plots plot(f̂)

Solving the model

Like last time, we prepare some functions, variables, and empty containers (Arrays)

# Model again u(c) = sqrt(c) T = 10; β = 0.9 π = 0.5 ;M₁ = 5 # Number of interpolation nodes Nᵐ = 50 # number of grid points in M grid Nᶜ = 50 # number of grid points in C grid M = Array{Vector{Float64}}(T) V = Array{Any}(T) C = Array{Any}(T)

The V and C arrays are allocated using the type “Any”. We will later look at how this can hurt performance, but for now we will simply do the convenient thing. Then we solve the last period

M[T] = linspace(0,M₁+T,Nᵐ) C[T] = M[T] V[T] = interpolate((M[T],), u.(C[T]), Gridded(Linear()))

This new thing here is that we are not just saving V[T] as an Array. The last element is the interpolant, such that we can simply index into V[T] as if we had the exact solution at all values of M (although we have to remember that it is an approximation). For all periods prior to T, we have to find the maximum as in the Bellman equation from above. To solve this reduced “two-period” problem (sum of utility today and discounted value tomorrow), we need to form expectations over the two possible state transitions given an M and a C today, and then we need to find the value of C that maximizes current value. We define the following function to handle this

# Create function that returns the value given a choice, state, and period v(c, m, t, V) = u(c)+β*(π*V[t+1][m-c+1]+(1-π)*V[t+1][m-c])

Notice how convenient it is to simply index into V[t] using the values we want to evaluate tomorrow’s value function at. We perform the maximization using grid search on a predefined grid from 0 to the particular M we’re solving form. If we abstract away from the interpolation step, this is exactly what we did last time.

for t = T-1:-1:1 M[t] = linspace(0,M₁+t,Nᵐ) C[t] = zeros(M[t]) Vt = fill(-Inf, length(M[t])) for (iₘ, m) = enumerate(M[t]) for c in linspace(0, m, Nᶜ) _v = v(c, m, t, V) if _v >= Vt[iₘ] Vt[iₘ] = _v C[t][iₘ] = c end end end V[t] = interpolate((M[t],), Vt, Gridded(Linear())) end

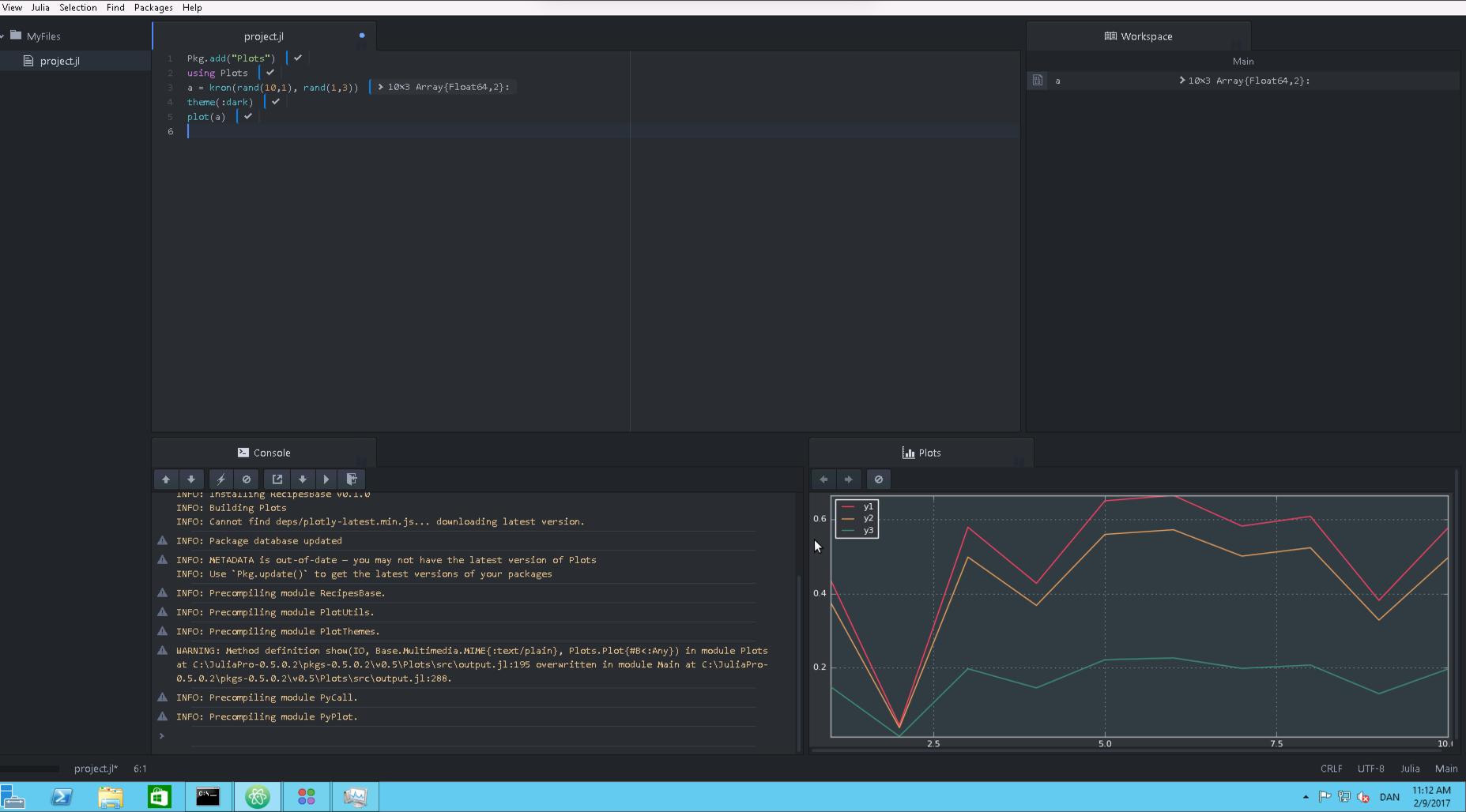

Then we can plot the value functions to verify that they look sensible

Nicely increasing in time and in M.

Using Optim.jl for optimization

The last loop could just as well have been done using a proper optimization routine. This will in general be much more robust, as we don’t confine ourselves to a certain amount of C-values. We use the one of the procedures in Optim.jl. In Optim.jl, constrained, univariate optimization is available as either Brent’s method or Golden section search. We will use Brent’s method. This is the standard method, so an optimization call simply has the following syntax

using Optim f(x) = x^2 optimize(f, -1.0, 2.0)

Unsurprisingly, this will return the global minimizer 0.0. However, if we constrain ourselves to a strictly positive interval

optimize(f, 1.0, 2.0)

we get a minimizer of 1.0. This is not the unconstrained minimizer of the square function, but it is minimizer given the constraints. Then, it should be straight forward to see how the grid search loop can be converted to a loop using optimization instead.

for t = T-1:-1:1 update_M!(M, M₁, t, Nᵐ) C[t] = zeros(M[t]) Vt = fill(-Inf, length(M[t])) for (iₘ, m) = enumerate(M[t]) if m == 0.0 C[t][iₘ] = m Vt[iₘ] = v(m, m, t, V) else res = optimize(c->-v(c, m, t, V), 0.0, m) Vt[iₘ] = -Optim.minimum(res) C[t][iₘ] = Optim.minimizer(res) end end V[t] = interpolate((M[t],), Vt, Gridded(Linear())) end

If our agent has no resources at the beginning of the period, the choice set has only one element, so we skip the optimization step. We also have to negate the minimum to get the maximum we’re looking for. The main advantage of using a proper optimization routine is that we’re not restricting C to be in any predefined grid. This increases precision. If we look at the number of calls to “v” (using Optim.f_calls(res)), we see that it generally takes around 10-30 v calls. With only 10-30 grid points from 0 up to M, we would generally get an approximate solution of much worse quality.

Julia bits and pieces

This time we used a few different package from the Julia ecosystem: Plots.jl, Interpolations.jl, and Optim.jl. These are based on my personal choices (full disclosure: I’ve contributed to the first and the last), but there are lots of packages to explore. Visit the JuliaLang discourse forum or gitter channel to discuss Julia and the various packages with other users.